
- Keywords: Unity, Hand Gestures, Meta Quest, Vision Pro Prototype, Hand Menu


A Nice Gesture for XR

What is a good hand gesture in an XR experience, and how can its success be measured? As hand recognition has become more accurate, faster, and fairly reliable, gestures are becoming the primary input in XR headsets. Still, designing a gesture is tricky when the principles are unclear and evaluation methods are lacking. Could findings from neuroscience help in designing and measuring hand gestures? Let's see whether those findings make it possible to design a good hand gesture for walking in VR and to measure its success.


What are the current principles for designing a hand gesture? Below are some principles collected from studies, papers, and Meta's and Apple's guides for designing hand gestures:

- Reliable and free from error.
- Easy to learn: logically and simply explainable, and memorable.
- Socially acceptable, with no gesture conflicts (avoid conversational gestures).
- Comfortable: minimize muscle usage and keep the cognitive workload low.
- Accessible to everyone.
- Respectful of multimodality, with good usability.
- Provide feedback.
- Natural and unambiguous: the gesture should fit the experience. Use familiar gestures that match people's expectations.

Can a good hand gesture for walking in VR be made using these principles? While some guidelines, like providing feedback or using socially acceptable gestures, are easy to understand, others are less clear. For instance, what indicates the comfort of a gesture, and how can it be measured? Is it the number of muscles used? Why can people play Beat Saber for an hour non-stop, while performing air taps on HoloLens causes fatigue within 5 minutes? Let's leave these questions aside and look at some hand gestures for walking in VR.


Touch the middle finger to the thumb to walk (VRChat).


All these creative solutions meet the guidelines to different extents. However, each has downsides, such as a lack of multimodality (you can't shoot and walk at the same time), and none of these methods is widely used. Plus, there is no standard way to compare gestures other than user feedback, which can be biased, and feedback on the same gesture can differ from one experience to another. So, what can be done to change this situation?

If a hand gesture causes fatigue, the user's attention shifts from their task to the fatigue, which is why gestures that don't cause fatigue are preferred. But what if some fatigue is acceptable, as long as it doesn't shift the user's attention away from their primary task? In Beat Saber, users' attention doesn't drift quickly despite all the movement, unlike with air taps on HoloLens. Shifting attention into a VR experience is so powerful that an FDA-approved VR system for chronic pain reduction exists. So, instead of making a gesture that is easy to learn, doesn't cause fatigue, and so on, let's make a gesture that doesn't shift the user's attention from their task to something else. What is attention, and how can it help in designing hand gestures?

The human attention system is a broad topic, so this article mentions only some of the findings. Michael Posner's research on attention systems is the main source for this article. Here is one of the findings:

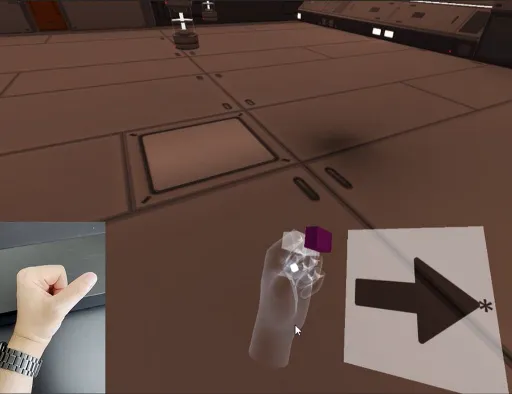

Humans can pay attention to only one subject at a time; multitasking is a myth. What our brain actually does is switch attention between tasks quickly, which is what makes it possible to drive a car and listen to the radio at the same time. Attention switches can cause errors and reduce efficiency in a task. Based on this point, an ideal hand gesture is one with the minimum attention-switch cost.

Some overlearned tasks can be performed without paying attention. Think of riding a bicycle: once you bike regularly for some time, you no longer pay attention to how to ride. Keeping your balance becomes automatic. For simpler wording, let's call these automatic actions (or automatic processing) habitual actions.

What habitual action can be borrowed from daily life to create a hand gesture for walking in VR? This article uses the joystick. Many people have the muscle memory to use one effortlessly, which makes it a good candidate for a habitual gesture. Here are some short clips of the gesture in a Unity demo running on Meta Quest 2:
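To make the joystick idea concrete, here is a minimal sketch of how a thumb position could be turned into a walking direction. It is not the demo's Unity code. It assumes the hand-tracking system reports a thumb-tip position and that a virtual stick center is anchored to the hand, both projected onto a 2D plane; the names `joystick_to_walk`, `radius`, and `dead_zone` and their default values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec2:
    x: float
    y: float


def joystick_to_walk(thumb_tip: Vec2, stick_center: Vec2,
                     radius: float = 0.02, dead_zone: float = 0.2) -> Vec2:
    """Map the thumb tip's offset from a virtual stick center to a walk vector.

    Positions are assumed to be in meters on a 2D plane aligned with the hand.
    The result has magnitude in [0, 1] and is (0, 0) inside the dead zone.
    """
    # Normalize by the (hypothetical) stick radius so a full deflection has length 1.
    nx = (thumb_tip.x - stick_center.x) / radius
    ny = (thumb_tip.y - stick_center.y) / radius
    mag = math.hypot(nx, ny)
    if mag < dead_zone:
        # Small tracking jitter near the center should not move the player.
        return Vec2(0.0, 0.0)
    # Clamp to the stick's edge, then rescale so speed starts at 0 right
    # outside the dead zone instead of jumping to dead_zone.
    scaled = (min(mag, 1.0) - dead_zone) / (1.0 - dead_zone)
    return Vec2(nx / mag * scaled, ny / mag * scaled)
```

The dead zone is a common joystick convention; with imprecise hand tracking it may matter even more than on a physical stick, since small jitter in the thumb position would otherwise make the player drift.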

How can the gesture's success be evaluated? Attention shifts can be measured through reaction time (RT). A task with visual cues is designed, and testers react to the cues through an input method. Conflicting cues are added to demand more of the users' attention. A lower RT to the cues indicates a faster attention shift, which is a positive sign for an input method.
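A minimal sketch of how the reaction time of each trial could be computed and averaged, assuming every trial logs when the cue appeared, which direction was expected, and when and how the tester responded. The `Trial` fields, the definition of "conflicting" (the cue points away from the expected direction), and the 0.1 s and 2.0 s cut-offs for anticipations and lapses are illustrative assumptions, not details of the original test.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Trial:
    cue_direction: str        # direction the visual cue points to, e.g. "left"
    target_direction: str     # direction the tester is expected to respond with
    cue_onset: float          # seconds, from a monotonic clock such as time.perf_counter()
    response_direction: Optional[str] = None
    response_time: Optional[float] = None  # seconds, same clock as cue_onset

    @property
    def conflicting(self) -> bool:
        # Assumption: a trial "conflicts" when the cue points away from the target.
        return self.cue_direction != self.target_direction


def reaction_time(trial: Trial, min_rt: float = 0.1, max_rt: float = 2.0) -> Optional[float]:
    """Return the RT in seconds, or None for missing, wrong, or out-of-range responses."""
    if trial.response_time is None or trial.response_direction != trial.target_direction:
        return None
    rt = trial.response_time - trial.cue_onset
    # Very fast responses are likely anticipations, very slow ones lapses.
    if rt < min_rt or rt > max_rt:
        return None
    return rt


def mean_rt_by_condition(trials: List[Trial]) -> Dict[str, Optional[float]]:
    """Average the valid RTs separately for congruent and conflicting trials."""
    groups: Dict[str, List[float]] = {"congruent": [], "conflicting": []}
    for t in trials:
        rt = reaction_time(t)
        if rt is not None:
            groups["conflicting" if t.conflicting else "congruent"].append(rt)
    return {name: (mean(rts) if rts else None) for name, rts in groups.items()}
```

Keeping conflicting trials separate makes it possible to see whether an input method slows down specifically when more attention is demanded, rather than only looking at one overall average.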

Test Design

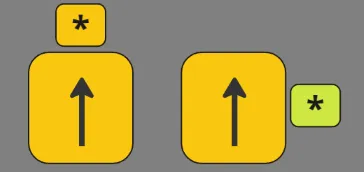

To test the walking gesture, simple VR tasks were designed for testers to react to. The test was done twice: once using the hand gesture and once using the arrow keys on a keyboard. Pressing arrow keys is assumed to be a habitual action that causes a low attention shift, so the average RT for the gesture is compared with the keyboard's. Testers didn't actually walk in the virtual environment; they simply looked at the cue and moved their fingers.

Correct test scenario when the arrow direction matches the asterisk.
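The arrow-and-asterisk scenario resembles a Posner-style cueing task, in which most trials are matching and a minority are conflicting. Here is a hypothetical trial-list generator under that assumption; the four directions, the 75% match ratio, and the function name `make_trials` are illustrative choices, not the article's actual settings.

```python
import random
from typing import List, Tuple

DIRECTIONS = ("up", "down", "left", "right")


def make_trials(n: int, match_ratio: float = 0.75, seed: int = 0) -> List[Tuple[str, str]]:
    """Return n shuffled (arrow_direction, asterisk_direction) pairs.

    round(n * match_ratio) pairs are matching (the arrow points at the asterisk);
    the rest are conflicting (the arrow points in a different direction).
    """
    rng = random.Random(seed)  # seeded, so a run can be reproduced exactly
    n_match = round(n * match_ratio)
    trials: List[Tuple[str, str]] = []
    for i in range(n):
        asterisk = rng.choice(DIRECTIONS)
        if i < n_match:
            arrow = asterisk
        else:
            arrow = rng.choice([d for d in DIRECTIONS if d != asterisk])
        trials.append((arrow, asterisk))
    rng.shuffle(trials)  # interleave matching and conflicting trials
    return trials
```

Reusing the same seed for the gesture run and the keyboard run gives both input methods an identical cue sequence; a different seed per run would reduce the chance of testers memorizing it.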

Test Preparation and Process

Test Results

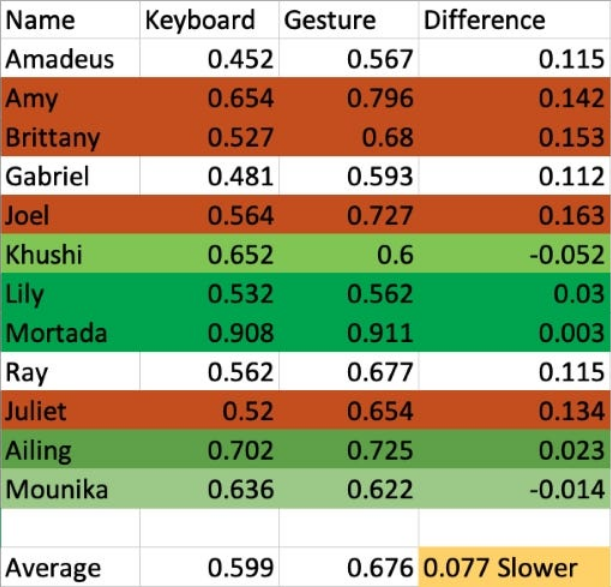

On average, keyboard RT was 0.077 seconds faster than hand-gesture RT. In other words, the keyboard performed slightly better than the hand gesture, although for some testers the hand gesture performed better. Here are the results for each tester:
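The comparison behind the per-tester table can be sketched as follows: average each tester's RTs per input method, flag the tester red or green, and average the gesture-minus-keyboard difference across testers. The data layout and the function name `compare_inputs` are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple

Row = Tuple[str, float, float, str]  # (tester, gesture mean RT, keyboard mean RT, color)


def compare_inputs(results: Dict[str, Tuple[List[float], List[float]]]) -> Tuple[List[Row], float]:
    """Compare hand-gesture and keyboard RTs (seconds) per tester.

    results maps a tester ID to (gesture RTs, keyboard RTs).
    Color is "red" when the gesture mean RT is higher than the keyboard mean RT,
    "green" when it is lower or equal.
    Also returns the mean across testers of (gesture mean - keyboard mean);
    a positive value means the keyboard was faster on average.
    """
    rows: List[Row] = []
    for tester, (gesture, keyboard) in results.items():
        g, k = mean(gesture), mean(keyboard)
        rows.append((tester, g, k, "red" if g > k else "green"))
    overall = mean(g - k for _, g, k, _ in rows)
    return rows, overall
```

For example, `compare_inputs({"A": ([0.5, 0.7], [0.5, 0.5])})` flags tester A red, because the gesture mean (0.6 s) is higher than the keyboard mean (0.5 s); these values are made up and are not the study's measurements.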

Per-tester reaction times in seconds. Red: gesture RT higher than keyboard RT. Green: gesture RT lower than or equal to keyboard RT.

After the test, the testers were asked the following questions (the questions are not part of the standard attention test):

1- How often do you play games that need a joystick? 60% play such games often.
2- Have you used VR before? 70% said yes.
3- Did you feel any fatigue? 5 out of 12 felt fatigue in their fingers.
4- Did you have to remember how to walk? All testers answered no.
5- Was it comfortable to use the controller? Only one tester answered no.
6- Was it natural to hold your hand and fingers in the position the gesture requires? 2 testers found it unnatural.

It's interesting how differently testers responded about comfort and fatigue. It's also important to point out that the fatigue happened mostly because testers' thumbs couldn't easily reach the directions, due to the low precision of hand tracking on Quest 2.

The Outcome

Although hand recognition on Quest 2 is only partially reliable, the results are promising. Hand recognition keeps improving, and technologies like EMG or BCI could make it precise if done well. The article's main point so far is to answer two questions: What is a good hand gesture in an XR experience, and how can its success be measured? With reaction time, gestures can be measured and compared. A lower reaction time means a better gesture in terms of attention shift, not overall performance. The walking gesture is imperfect but feels natural and easy to learn. Note that I used only habitual actions to design the gesture; there are more findings about attention that could be used to improve the design. The gesture was designed to solve the attention-shift problem, not fatigue, learning time, etc.

Can attention findings be used to enhance other design aspects of XR?

Left: MRTK Halo effect on the “Hand Joint” button — Right: Vision Pro Hover Effect.

While these feedback effects are great for assuring users that their finger is close to the button and partly make up for the lack of tactile feedback, they don't reduce the attention users must spend to guide their finger to the right place and avoid touching other buttons by mistake. How can a button's design let users be less precise, and so spend less attention, when reaching for it? Here is an inspiration: make the intended button bigger.

Apple Watch Menu

On the Apple Watch, the central icons are bigger, so users can see them better and tap them with a lower chance of error. If you own an Apple Watch, you know that pressing an app icon in its menu is easier than pressing a button in XR. Inspired by the Apple Watch design, I made a hand menu in the walking demo that lets users select different controller sizes and speeds. When the user's finger gets closer to a button, that button gets bigger and the other buttons get smaller. A bigger target is easier to hit, so less attention is spent on being precise. Pressing buttons feels much easier, and it's possible to hit them without looking directly at them. I might write another article about typing in XR using this and other methods.
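The magnifying hand menu could work roughly like this: find the button nearest the fingertip and blend its scale up, and the others' down, as the finger approaches. This is a hypothetical sketch, not the demo's implementation; the distance thresholds (`near`, `far`) and scale factors (`grow`, `shrink`) are made-up defaults.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]  # meters, in the same space as the hand-tracking data


def button_scales(finger: Point, buttons: Dict[str, Point],
                  near: float = 0.03, far: float = 0.10,
                  grow: float = 1.6, shrink: float = 0.8) -> Dict[str, float]:
    """Return a scale factor per button for a fingertip position.

    The button nearest the fingertip scales toward `grow`, every other button
    toward `shrink`. The effect blends in linearly as the nearest distance drops
    from `far` to `near`; beyond `far`, all buttons keep scale 1.0.
    """
    dists = {name: math.dist(finger, pos) for name, pos in buttons.items()}
    nearest = min(dists, key=dists.get)
    # t = 0 when the finger is far away, 1 when it is within `near` of the button.
    t = min(max((far - dists[nearest]) / (far - near), 0.0), 1.0)
    return {
        name: 1.0 + t * ((grow if name == nearest else shrink) - 1.0)
        for name in buttons
    }
```

Shrinking the neighbors while the target grows keeps the enlarged button from overlapping them. A production menu would probably also add some hysteresis, so the enlarged button doesn't flicker when the finger is about equally close to two buttons.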

What about Attention in handheld AR?

Placing an object in the world is a typical interaction in handheld AR. Before looking at examples from the IKEA app, remember that our minds don't like unnatural events. Anything that seems unusual will cause an attention shift.

IKEA — Chair Placement in AR

In the IKEA app, the chair passes through the wall and faces the wrong direction, which is unusual; a chair usually faces away from the wall behind it. The attention shift could be reduced if the chair couldn't pass through walls and instead detected them to orient itself accordingly. This is another example of putting attention at the center of XR design.

AR Placement Prototype.
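A minimal sketch of the two placement rules suggested above, assuming the AR framework has already detected a wall and reports a point on it and a normal pointing into the room, both projected onto the floor plane. The function name, the 0.3 m margin, and the yaw convention (degrees from +z toward +x) are assumptions for illustration.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]  # (x, z) on the floor plane, in meters


def place_against_wall(pos: Vec, wall_point: Vec, wall_normal: Vec,
                       margin: float = 0.3) -> Tuple[Vec, float]:
    """Keep an object on the room side of a wall and face it away from the wall.

    wall_normal points from the wall into the room (it is normalized here).
    margin is the minimum distance from the wall to the object's center.
    Returns the corrected position and a yaw angle in degrees, measured from
    +z toward +x (assumed convention).
    """
    nx, nz = wall_normal
    length = math.hypot(nx, nz)
    nx, nz = nx / length, nz / length
    # Signed distance of the object's center from the wall along the normal.
    s = (pos[0] - wall_point[0]) * nx + (pos[1] - wall_point[1]) * nz
    if s < margin:
        # Push the object back out along the normal so it can't pass the wall.
        pos = (pos[0] + (margin - s) * nx, pos[1] + (margin - s) * nz)
    # Face along the normal, i.e. away from the wall and into the room.
    yaw = math.degrees(math.atan2(nx, nz))
    return pos, yaw
```

This handles a single wall; with several detected walls, one simple option is to apply the constraint for each wall in turn and take the orientation from the nearest one.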

Conclusion

Using attention in design is not new. There are many studies and guides on using users' attention to design interactions and interfaces for 2D displays, but few on using it to design for XR. I think many gaps in XR design can be filled by putting users' attention at the center of XR design, because, in my opinion, attention connects directly with immersion, presence, and experience. One gap for me was not knowing how to make a hand gesture: where to start the design and how to evaluate it. I wanted to share my findings and my journey researching the topic. I hope this helps toward a better design future.

Download the Demo

You can download the VR demo to test walking and interacting with the buttons. The demo only works on Quest 2!
